Chalmers University of Technology

Data Science and AI Division

changheon.han(at)chalmers.se

Experience


I am a Ph.D. student at Chalmers University of Technology, affiliated with WASP-HS (Wallenberg AI, Autonomous Systems and Software Program – Humanity and Society). My research focuses on learned cultural representation spaces in generative AI for creative domains. Learn more about my research here.

Research keywords include: Multimodal Learning, Signal Processing, Natural Language Processing, Music Information Retrieval.

Before starting my Ph.D., I was a Research Engineer at Singapore Management University, where I worked on career trajectory analysis using dynamic GNNs. I also completed a research internship at Sony Europe, working on fine-grained instrument music source separation with text-audio multimodal encoders. I received my M.S. in Artificial Intelligence from Hanyang University in 2025, advised by Prof. Minsam Ko. Before entering academia, I worked as a Data Analyst at Coupang and spent 7 years as a professional music producer; my tracks were featured on Spotify K-pop editorial playlists and reached over 800K streams.


Research Highlights

Optimizing Music Source Separation in Complex Audio Environments Through Progressive Self-Knowledge Distillation

ChangHeon Han, SuHyun Lee

ICASSP 2024 Workshops (ICASSPW) Technical Report

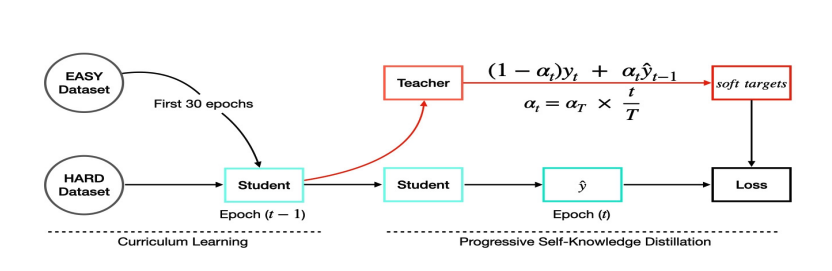

This technical report presents a fine-tuning strategy for hearing-aid-oriented source separation, where stereo signal entanglement makes training unstable. By softening targets using predictions from the previous epoch, the proposed method improves SDR by 1.2 dB over the baseline.

Tags: music source separation, knowledge distillation, audio
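The target-softening idea in the summary above can be illustrated with a short sketch. It follows the general progressive self-knowledge distillation pattern, in which the training target is a blend of the ground-truth stem and the model's own estimate from the previous epoch, and the weight on that estimate grows as training proceeds. The linear schedule, the `alpha_max` value, and the function names `alpha_schedule`, `soften_targets`, and `sdr` are hypothetical and are not taken from the report. The `sdr` helper is the plain energy-ratio definition; the report's evaluation may use a different SDR variant.

```python
import numpy as np


def alpha_schedule(epoch: int, total_epochs: int, alpha_max: float = 0.8) -> float:
    """Hypothetical linear schedule: the weight on last epoch's predictions grows with the epoch."""
    return alpha_max * epoch / total_epochs


def soften_targets(ground_truth: np.ndarray, prev_pred: np.ndarray, alpha: float) -> np.ndarray:
    """Blend the ground-truth stem with the model's estimate from the previous epoch."""
    return (1.0 - alpha) * ground_truth + alpha * prev_pred


def sdr(reference: np.ndarray, estimate: np.ndarray, eps: float = 1e-8) -> float:
    """Plain signal-to-distortion ratio in dB: 10 * log10(||s||^2 / ||s - s_hat||^2)."""
    num = np.sum(reference ** 2)
    den = np.sum((reference - estimate) ** 2)
    return float(10.0 * np.log10((num + eps) / (den + eps)))


# Toy usage with a random stereo "stem" (1 s at 44.1 kHz) and a noisy cached prediction.
rng = np.random.default_rng(0)
target = rng.standard_normal((2, 44100))
prev_epoch_pred = target + 0.1 * rng.standard_normal(target.shape)

alpha = alpha_schedule(epoch=5, total_epochs=10)  # 0.4 at the midpoint of training
soft_target = soften_targets(target, prev_epoch_pred, alpha)
print(f"alpha={alpha:.2f}, SDR of cached prediction: {sdr(target, prev_epoch_pred):.1f} dB")
```

At epoch 0 the schedule returns 0, so training starts on the unmodified ground truth. Later epochs lean increasingly on the model's own smoother estimates, which is the mechanism the summary credits with stabilizing fine-tuning on entangled stereo mixtures.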

Track Role Prediction of Single-Instrumental Sequences

ChangHeon Han, SuHyun Lee, Minsam Ko

ISMIR 2023 Late-Breaking/Demo (LBD)

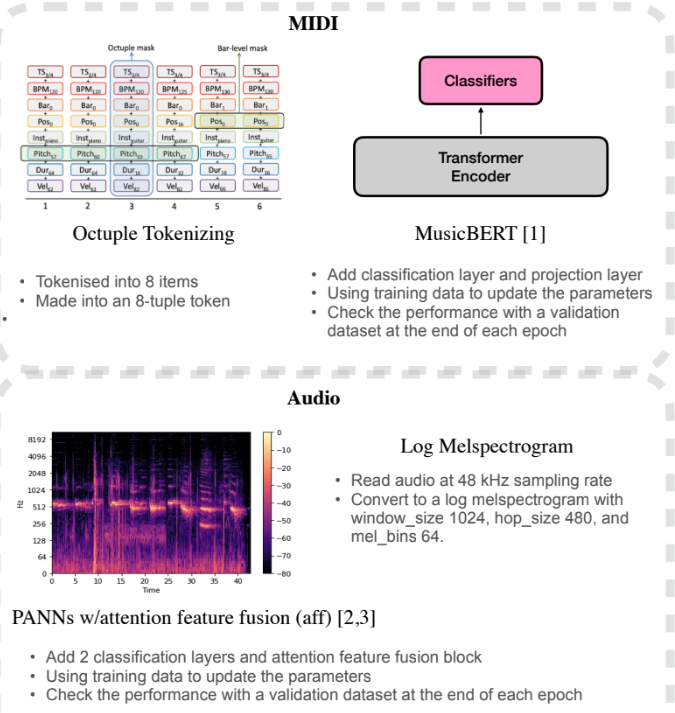

This work proposes a deep learning model that automatically predicts the track role of single-instrument music sequences, reducing the need for manual annotation. The model achieves 87 percent accuracy in the symbolic domain and 84 percent in the audio domain, demonstrating its potential for AI-based music generation and analysis.

Tags: music information retrieval, sequence modeling
