Music and creative content carry rich cultural meanings that are often expressed through multiple modalities: audio signals, lyrics, symbolic representations, and contextual metadata. Yet teaching machines to understand and generate such content remains challenging, because it requires not only processing diverse data types but also capturing the nuanced cultural and emotional contexts embedded within them.

My research focuses on understanding how AI models learn and represent cultural expressions in creative domains. I investigate how models encode cultural meanings in their learned representations, and how we can interpret and understand these representations. By bridging modalities through multimodal learning, analyzing musical structure via music information retrieval, and leveraging techniques from signal processing and natural language processing, I aim to make AI's understanding of creative content more interpretable and aligned with human perception.

2025

STAR: Strategy-Aware Refinement Module in Multitask Learning for Emotional Support Conversations

SuHyun Lee, ChangHeon Han, Woohwan Jung, Minsam Ko

NLP for Positive Impact Workshop (ACL)

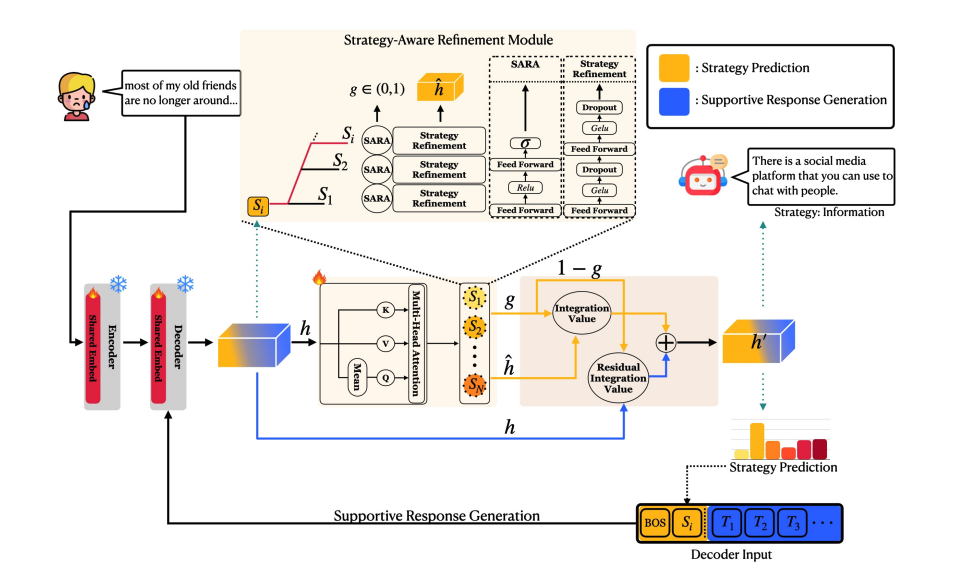

This work proposes STAR, a strategy-aware refinement module that mitigates task interference in emotional support dialogue systems. By disentangling decoder representations and dynamically fusing task-specific information, the model achieves state-of-the-art performance in both strategy prediction and response generation.

# emotional support # multitask learning # NLP

Sentimatic: Sentiment-guided Automatic Generation of Preference Datasets for Customer Support Dialogue System

SuHyun Lee, ChangHeon Han

NAACL 2025

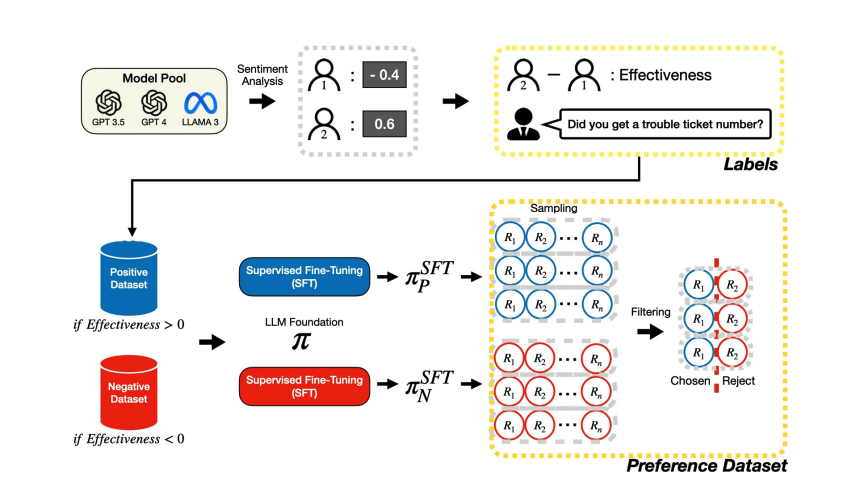

Sentimatic introduces an automatic framework for generating preference datasets for preference optimization without human annotation. Using sentiment-guided classification and controlled sampling, the method produces large-scale high-quality preference data and improves emotional appropriateness in AI customer support systems.

# dialogue systems # preference data # NLP

2024

ASMR Sound Generation through Attribute-based Prompt Augmentation

ChangHeon Han, Jaemyung Shin, Minsam Ko

Korea Computer Congress 2024 (KCC)

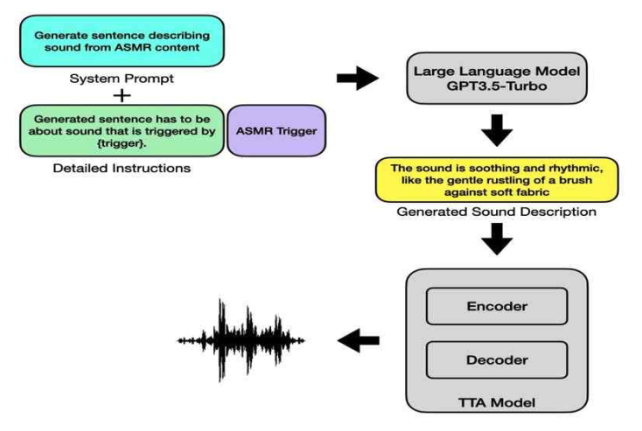

This study proposes an LLM-based prompt augmentation method for improving ASMR audio generation in Text-to-Audio models. By explicitly modeling ASMR triggers and key attributes such as action and material, the method produces more detailed sound descriptions and improves both generation quality and user experience.

# text-to-audio # prompt augmentation # ASMR

Optimizing Music Source Separation in Complex Audio Environments Through Progressive Self-Knowledge Distillation

ChangHeon Han, SuHyun Lee

ICASSP 2024 Workshops (ICASSPW) Technical Report

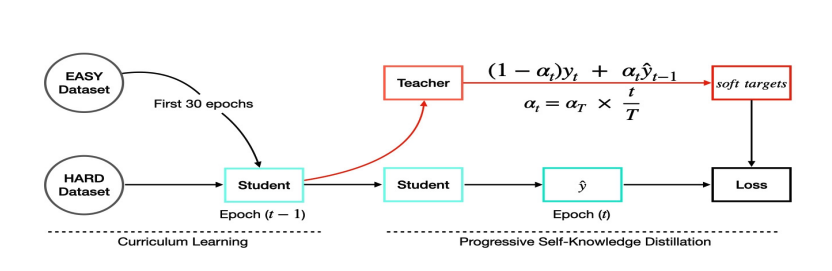

This technical report presents a fine-tuning strategy for hearing-aid-oriented source separation, where stereo signal entanglement makes training unstable. By softening targets using predictions from the previous epoch, the proposed method improves SDR by 1.2 dB over the baseline.

# music source separation # knowledge distillation # audio
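The target-softening idea described above can be illustrated with a minimal sketch. It assumes a linearly growing mixing weight, as in the general progressive self-knowledge distillation formulation; the report's exact schedule and hyperparameters are not stated here, so `mixing_weight`, `soften_target`, and `alpha_max` are hypothetical names and values chosen for illustration only.

```python
def mixing_weight(epoch, total_epochs, alpha_max=0.8):
    """Linearly increase the trust placed in the previous epoch's predictions.

    alpha_max is an assumed hyperparameter, not a value from the report.
    """
    return alpha_max * epoch / total_epochs


def soften_target(target, prev_prediction, alpha):
    """Blend the ground-truth signal with the previous epoch's estimate."""
    return [(1.0 - alpha) * t + alpha * p
            for t, p in zip(target, prev_prediction)]


# Toy example: a 4-sample "stem" and the model's estimate from the previous epoch.
target = [1.0, 0.0, -1.0, 0.5]
prev_prediction = [0.8, 0.2, -0.6, 0.5]

alpha = mixing_weight(epoch=5, total_epochs=10, alpha_max=0.8)
soft = soften_target(target, prev_prediction, alpha)
# The training loss for the current epoch would then be computed against
# `soft` instead of `target`.
```

Early in training the weight is close to zero, so the model learns from the clean targets; as training progresses, more of the model's own previous estimate is mixed in, which is what softens the targets.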

2023

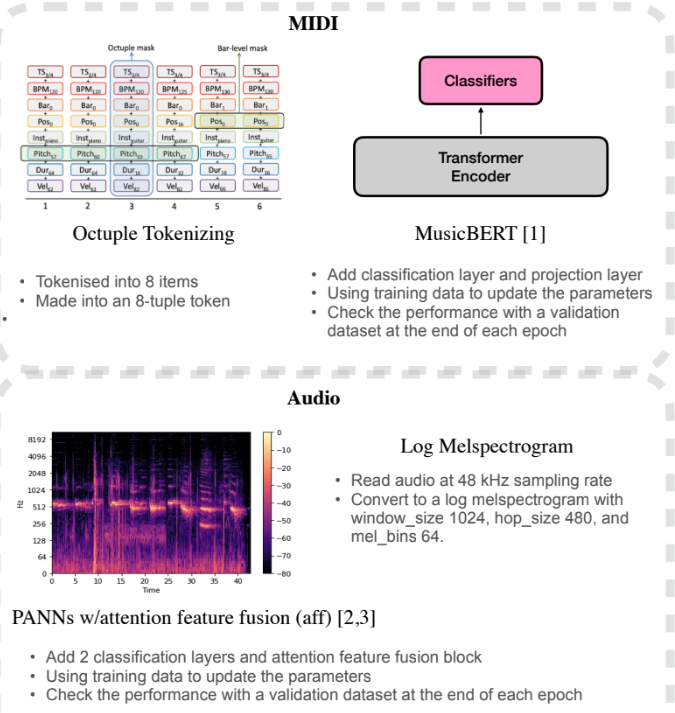

Track Role Prediction of Single-Instrumental Sequences

ChangHeon Han, SuHyun Lee, Minsam Ko

ISMIR 2023 Late-Breaking/Demo (LBD)

This work proposes a deep learning model that automatically predicts the track role of single-instrument music sequences, reducing the need for manual annotation. The model achieves 87 percent accuracy in the symbolic domain and 84 percent in the audio domain, demonstrating its potential for AI-based music generation and analysis.

# music information retrieval # sequence modeling

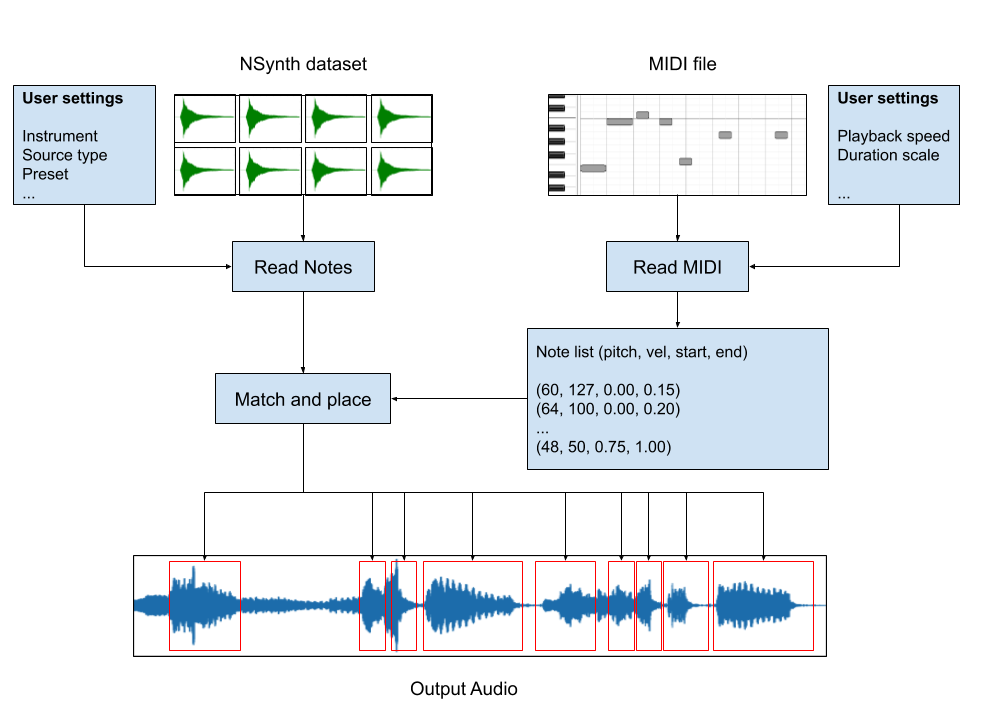

NSynth-MIDI-Renderer for massive MIDI dataset

Project

Open-source tool that renders large MIDI datasets with NSynth samples, assigning candidate samples from the NSynth dataset so that a single preset stays fixed across each MIDI sequence.

# music information retrieval # midi # dataset

MIDI-Metadata-Extractor

Project

Splits a multi-track MIDI file into individual instrument tracks, extracts detailed metadata for each track, and supports truncating and saving tracks to a specified number of measures.

# music information retrieval # midi # tools

2022

Soothing Sound White Noise Generator

Project

Developed a program that generates soothing sounds using five distinct noise colors and three weighting filters (copyright registered with the Korea Copyright Commission).

# audio # signal processing # tools
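As a rough illustration of what "noise colors" means, the sketch below derives two other colors from white noise using only the Python standard library. It is not the program's implementation: the five colors and three weighting filters it actually uses are not specified here, and the function names, seed, and sample count are assumptions made for this example.

```python
import random


def white_noise(n, seed=0):
    """Gaussian white noise: roughly equal power at all frequencies."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def brown_noise(white):
    """Running sum of white noise: power falls off roughly as 1/f^2."""
    out, total = [], 0.0
    for x in white:
        total += x
        out.append(total)
    return out


def violet_noise(white):
    """First difference of white noise: power rises roughly as f^2."""
    return [b - a for a, b in zip(white, white[1:])]


def normalize(signal):
    """Scale the signal so its peak absolute value is 1."""
    peak = max(abs(x) for x in signal) or 1.0
    return [x / peak for x in signal]


white = white_noise(48000)  # one second at an assumed 48 kHz sample rate
brown = normalize(brown_noise(white))
violet = normalize(violet_noise(white))
```

Integrating white noise emphasizes low frequencies (a deep rumble), while differencing emphasizes high frequencies (a bright hiss). Intermediate colors such as pink noise need a gentler spectral slope and are typically produced with dedicated filters.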
